What is the absolute necessity of Unconnected lookup, if same functionality can be achieved by Connected Lookup Transformation?

The main advantage of using an unconnected lookup is the fact that you can use it in an expression/any other transformation like a “function” in other programming languages. The Lookup Cache is calculated only once as part of the session initialization and hence more efficient, since all subsequent look-ups will re-use the Cache.

The main advantage of using an unconnected lookup is the fact that you can use it in an expression/any other transformation like a “function” in other programming languages. The Lookup Cache is calculated only once as part of the session initialization and hence more efficient, since all subsequent look-ups will re-use the Cache.

a)When you are looking up against a Static dimension (or any table) that is rarely ever updated

The most common example for this is the Date Dimension. Usually loaded once when your Data Warehouse goes to Production and very rarely updated.What makes it even more appealing to use unconnected lookup for Date Dimension is the Date Dimension Role Playing.

Example, An online order can have Order Date, Ship Date, Cancelled Date, Recieved Date and so on and the same Date Dimension table plays multiple roles.

Without an unconnected Lookup, here’s how your mapping would look..Also note that the Lookup Is being done on the same table internally (DATE_DIM), but the cache is being calculated one for each lookup.

Example, An online order can have Order Date, Ship Date, Cancelled Date, Recieved Date and so on and the same Date Dimension table plays multiple roles.

Without an unconnected Lookup, here’s how your mapping would look..Also note that the Lookup Is being done on the same table internally (DATE_DIM), but the cache is being calculated one for each lookup.

Using an unconnected Lookup For Date Dimension, this is how it would be transformed..

As you can see, this promotes greater reuse ,a less complex mapping and is more efficient becuase of lesser Caching.

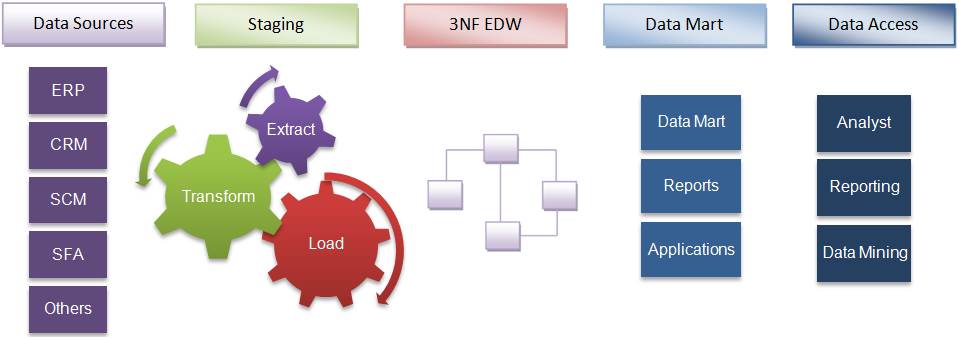

b) When you are looking up against a Base/Conformed Dimension that is loaded before any of the Facts are loaded.

Another example (one which is not static Data) is looking up against any customer Master Data/Conformed Dimension. Your DataWarehouse can have many Confirmed Dimensions, which are loaded before any of the Fact Loads.

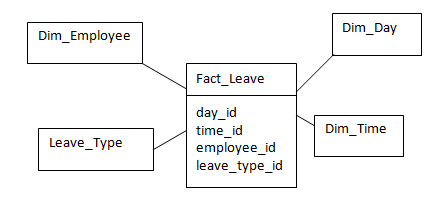

One such example is the Employee Dimension. You can lookup the employee key multiple times in a given mapping and this makes a great candidate for Unconnected Lookup.

One such example is the Employee Dimension. You can lookup the employee key multiple times in a given mapping and this makes a great candidate for Unconnected Lookup.

c) The logic used in the Lookup-override is required at a lot of other places.

This is more of a good coding practise than an Informatica specific “tip”. If your lookup involves a lookup override and you calculate the actual fact using a formula instead of using a direct column value, it would make sense to get the result using an unconnected lookup and use it at multiple places using a :LKP expression.

This is more of a good coding practise than an Informatica specific “tip”. If your lookup involves a lookup override and you calculate the actual fact using a formula instead of using a direct column value, it would make sense to get the result using an unconnected lookup and use it at multiple places using a :LKP expression.

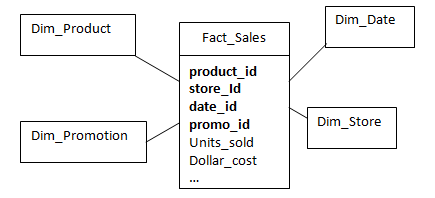

One such example is when you want to Lookup Revenue based on the Status of the Order instead of a direct Lookup, and you have a look-up override like the one below.

So if your lookup data is constant during your load and you have a good case for reuse, Unconnected Lookup can be very useful, both in terms of maintainability and efficiency.

Courtesy:ETL Developer